
Using AI for marketing is filled with minefields, so let’s dig into some typical privacy concerns with AI, and how to deal with them in your marketing.

You already know that AI technology is everywhere now – writing marketing copy, answering customer service questions, and even composing music. But this explosion of artificial intelligence brings with it a shadow: privacy issues are growing alongside its capabilities.

This all sounds like science fiction, but honestly, these AI privacy concerns are real-world issues we need to address today, not tomorrow. From the data AI systems collect to the potential for bias and discrimination, the implications of AI for privacy are far-reaching. We need to understand these challenges so we can harness AI’s power while safeguarding our rights and freedoms.

The Data Dilemma: How AI Eats Information (and Why It Matters)

At its core, AI is a hungry beast that needs to collect data, learn from it, and use it to make decisions. The more data you feed it, the “smarter” it gets. This data can be anything: your online searches, your social media posts, your location history, your medical records…the list goes on.

For example, remember that time you searched for “best pizza near me”? Search engines and ad platforms can log that query, along with which websites you visit and how long you linger on certain pages. AI systems then combine these data points into an increasingly accurate picture of your likes, dislikes, and habits, and can even predict what you might buy or do in the future.

But privacy issues with AI are a double-edged sword. As a consumer, you want to protect your privacy without missing out on the benefits of artificial intelligence. As a business owner, you want artificial intelligence to put your business in front of your ideal customers.

So how do you balance the benefits of AI with the protection of personal data?

The Privacy Paradox: Personalized Experiences & Personal Data Risks

Now, some might say, “But wait, doesn’t AI give us cool, personalized experiences?” Absolutely. It powers those movie recommendations we love, helps filter out spam, and even assists doctors in diagnosing diseases earlier.

The problem is, these amazing capabilities come at a price – the information AI needs to give us what we want is also the same sensitive data that, if misused or mishandled, can be turned against us. Imagine your data falling into the wrong hands. This is where the potential for privacy breaches and the need for robust data protection become glaringly apparent.

Think about an insurance company. Using AI, they could analyze your social media activity, your online purchases, and other data. If they decide you’re engaging in risky behavior, they could increase your insurance premiums.

They might even deny you coverage altogether. And without proper regulations and transparency, you might never even know why. This is where AI privacy issues get serious.

Facial Recognition & AI Surveillance: Are We Trading Liberty for Convenience?

Facial recognition technology powered by AI, which often relies on biometric data, is another area where privacy concerns are growing rapidly. In some cities, you’ll now find these systems used by law enforcement for identification and even predictive policing. This use of AI techniques raises fundamental questions about the balance between security and freedom in a technologically advanced society.

On one hand, proponents argue these systems can help catch criminals and increase public safety. On the other, critics point out the lack of transparency, the potential for misuse and discrimination, and the chilling effect this type of pervasive surveillance can have on our fundamental freedoms.

This is especially concerning given that AI systems are often opaque in their decision-making processes, making it difficult to challenge or understand their conclusions.

Think back to 2017, when The Economist reported that democracy was declining in many countries as people lost faith in their government institutions. Around the same time, the Cambridge Analytica scandal came to light: data harvested from millions of Facebook users had been used to build highly specific psychographic profiles for targeting voters during the 2016 US election.

They suggested this erosion of trust isn’t an isolated incident, but a disturbing pattern – arguing that if AI continues down this road of “manipulating democracy’s levers,” we could see a further decline in trust and a rise in authoritarianism. Pretty scary stuff.

Bias In, Bias Out: The Problem of AI Discrimination

Now, this is where things get tricky and raise significant privacy concerns. People often think of AI as a perfectly objective system. However, because AI learns from the data we feed it, any biases that exist in that data get reflected and even amplified in the AI’s decision-making.

This raises serious concerns about the potential for AI to perpetuate existing social inequalities, particularly if these systems are used to make decisions about individuals’ lives.

A well-known example is the case where IBM used almost a million photos from Flickr to train its facial recognition software without the permission of the people in the photos. IBM argued the photos were publicly available, but critics pointed out the harm of secondary use: people had shared those photos on Flickr for an entirely different purpose.

This wasn’t an isolated incident. There have been many examples of facial recognition systems that struggle to correctly identify people of color, leading to serious concerns about racial bias in AI algorithms. The AI Now Institute’s 2017 report highlighted the dangers of this, warning that without serious effort to address bias in data and algorithms, these technologies could “reinforce existing inequalities” and do more harm than good. It really makes you wonder what the future holds.

The “Black Box” Problem & the Need for Transparency

Here’s another challenge: the more sophisticated AI becomes, the less transparent it is, even to the people who built it. Some researchers call this the “black box” problem, and it raises important questions about data privacy, accountability, and the risk of AI systems operating without adequate oversight.

Basically, AI algorithms can be so complex that even their developers sometimes struggle to fully understand how they work. If an AI makes a decision about a loan application, a job interview, or even a medical diagnosis, it can be difficult or impossible to know exactly what factors influenced that decision. This lack of explainability is a significant barrier to building trust in AI systems, particularly when those systems are used to make high-stakes decisions that impact individuals’ lives.

In his 2017 article in the MIT Technology Review, Will Knight pointed out this lack of explainability as one of AI’s biggest challenges, arguing that “if we want to be able to use AI in areas like healthcare, the legal system, or finance, where trust and accountability are essential, we need AI systems that are not only effective, but also explainable.” It’s tough to trust something if we don’t understand how it works, isn’t it?

Navigating the AI Frontier: Balancing Innovation & Privacy

Now, this doesn’t mean we need to hit the brakes on AI development altogether. Emerging technologies like AI have enormous potential to transform our lives in positive ways, with applications ranging from improving healthcare outcomes to addressing climate change.

But we can’t just ignore these privacy concerns either. We need to develop AI ethically and responsibly. It’s up to us – individuals, policymakers, and tech leaders – to work together, strike the right balance, and ensure that AI development serves humanity, protects our rights, and reflects our values. Here’s how:

Transparency & Explainability: Shedding Light on the Black Box

First and foremost, we need more transparency in AI development, especially in the use of AI tools. That means making sure the decision-making processes of AI are as understandable and explainable as possible. This transparency is crucial for building trust in AI systems and ensuring that they are used fairly and responsibly. After all, if we don’t understand how AI systems are making decisions, how can we trust those decisions or hold the systems accountable?

Think about requiring AI developers to provide clear documentation and justification for the decisions their systems make. We should also explore “explainable AI,” which focuses on developing algorithms that can be understood even by people without specialized knowledge. Transparency only grows more important as AI becomes further integrated into our lives.

Data Protection & Security: Building Trust through Responsible Stewardship

You know what they say: “Data is the new oil.” And just like any precious resource, data needs to be handled and shared with care and respect. AI development must prioritize strong data security measures, such as robust encryption and anonymization techniques that protect personal information. That means collecting, storing, and processing data securely, and giving individuals control over how their data is used.

Here’s an example: CSIRO’s Data61, the data and digital specialist arm of Australia’s national science agency, is at the forefront of privacy preservation research. Its work shows how to enable sophisticated analysis while safeguarding sensitive information. Initiatives like this highlight the importance of embedding privacy considerations into the development and deployment of AI technologies. By designing systems with privacy in mind, we can mitigate risks and foster greater trust in AI applications.

This should be the standard. By embedding these security practices into AI development, organizations will improve trust and minimize the risks associated with data breaches or unauthorized access.
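To make “anonymization techniques” a little more concrete, here is a minimal Python sketch of one related technique: pseudonymization with a keyed hash (HMAC-SHA256). It assumes customer records are plain dictionaries; the field names (`name`, `email`, `phone`) and the helper functions are hypothetical, and the randomly generated key stands in for one you would keep in a secrets manager. Note that this is pseudonymization rather than true anonymization: whoever holds the key can re-link tokens to people, and the GDPR still treats pseudonymized data as personal data.

```python
import hashlib
import hmac
import secrets

# Hypothetical key for illustration only. In a real system it would be loaded
# from a secrets manager and stored separately from the pseudonymized data.
PSEUDONYM_KEY = secrets.token_bytes(32)

# Hypothetical field names treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed SHA-256 hash (hex string).

    The same normalized input always yields the same token, so records can
    still be joined for analysis, but without the key nobody can recompute
    the token from a guessed email address.
    """
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_ai(record: dict) -> dict:
    """Drop direct identifiers and add a pseudonymous customer ID."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["customer_id"] = pseudonymize(record["email"])
    return cleaned


if __name__ == "__main__":
    customer = {
        "name": "Ada",
        "email": "Ada@Example.com",
        "phone": "555-0100",
        "last_search": "best pizza near me",
    }
    print(prepare_for_ai(customer))
```

A keyed hash is used instead of a plain hash because common identifiers like email addresses are easy to guess, and an unkeyed hash of a guessed address would match the stored token.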

Regulation & Ethical Frameworks: Shaping AI for Good with General Data Protection Regulation

Regulation is always a bit of a touchy subject. While AI regulations need to be put in place to help prevent misuse and ensure that AI development is guided by ethical principles, too much regulation can stifle innovation. Finding the right balance between fostering innovation and protecting fundamental rights is crucial for ensuring that AI technologies are developed and deployed responsibly.

But listen to this: remember when Europe rolled out the GDPR (General Data Protection Regulation) in 2018? That sent shockwaves through the tech world. All of a sudden, user privacy moved to the front burner. It was a game changer, and a wake-up call that demonstrated the need for comprehensive privacy legislation in the digital age.

Similarly, the California Consumer Privacy Act (CCPA) gives Californians the right to know what personal information businesses collect about them and to opt out of the sale of their personal data.

It’s really all about balance. AI regulations should be clear, comprehensive, and flexible enough to adapt to this fast-evolving field.

The good news?

More and more countries and organizations are recognizing the urgency of creating ethical frameworks for AI. Take the EU AI Act, which sorts AI systems by risk level and places stricter obligations on high-risk uses. It works alongside the GDPR, which already requires companies to build data protection into their systems from the design phase. That’s the idea behind “privacy by design”: consumer rights hardwired into these systems from the ground up.

Here’s a List of AI and Data Privacy Laws You Can Reference

- General Data Protection Regulation (GDPR) – European Union: The official GDPR text and additional resources can be found on the European Commission website.

- California Consumer Privacy Act (CCPA) and California Privacy Rights Act (CPRA): More information can be found on the State of California Department of Justice website.

- EU AI Act: Details about the EU’s regulation on artificial intelligence can be accessed on the European Commission’s digital strategy page.

- Colorado Privacy Act: You can find more about this act on the Colorado Attorney General’s Office website.

- Connecticut Data Privacy Act: Information is available on the Connecticut General Assembly website.

- Virginia Consumer Data Protection Act: Details are provided by the Virginia State Government.

- Utah Consumer Privacy Act: The Utah State Legislature provides information on their official website.

- Delaware Personal Data Privacy Act: More details can be found on the Delaware General Assembly website.

- Indiana Consumer Data Protection Act: For more information, visit the Indiana General Assembly website.

- Montana Consumer Data Privacy Act: Information is available on the Montana State Legislature website.

- Oregon Consumer Privacy Act: You can learn more on the Oregon Legislative Information System.

- Tennessee Information Protection Act: Details are available on the Tennessee General Assembly website.

- Texas Data Privacy and Security Act: Visit the Texas Legislature Online.

- New York City’s Local Law 144 (regulating AI in hiring): More information is provided by the New York City government website.

- Illinois Biometric Information Privacy Act (BIPA): Information can be found on the Illinois General Assembly website.

- Washington State’s working group on automated decision-making systems: Details are available on the Washington State Legislature website.

User Education & Empowerment: Taking Control of Our Digital Footprints

Privacy, my friend, isn’t just about laws and regulations. It’s also about educating ourselves on AI and how it collects and uses data. That includes understanding how our data is used to train AI algorithms, what that means for our privacy, and the steps we can take to protect our personal information in an increasingly data-driven world.

Did you know that almost every website and app we use collects our information, even if their privacy policies bury the details in dense legalese? Seriously, check out the privacy settings on your phone; it’s eye-opening how much control we give away without realizing it. Understanding the choices we make about our data is paramount in the age of AI.

But here’s the empowering part – by understanding the basics of AI and taking control of our data, making conscious choices about what information we share and with whom, we’re better equipped to protect our privacy and advocate for more responsible AI development. Just remember, knowledge is power.

What’s Your Responsibility as a Business Owner Regarding Generative AI?

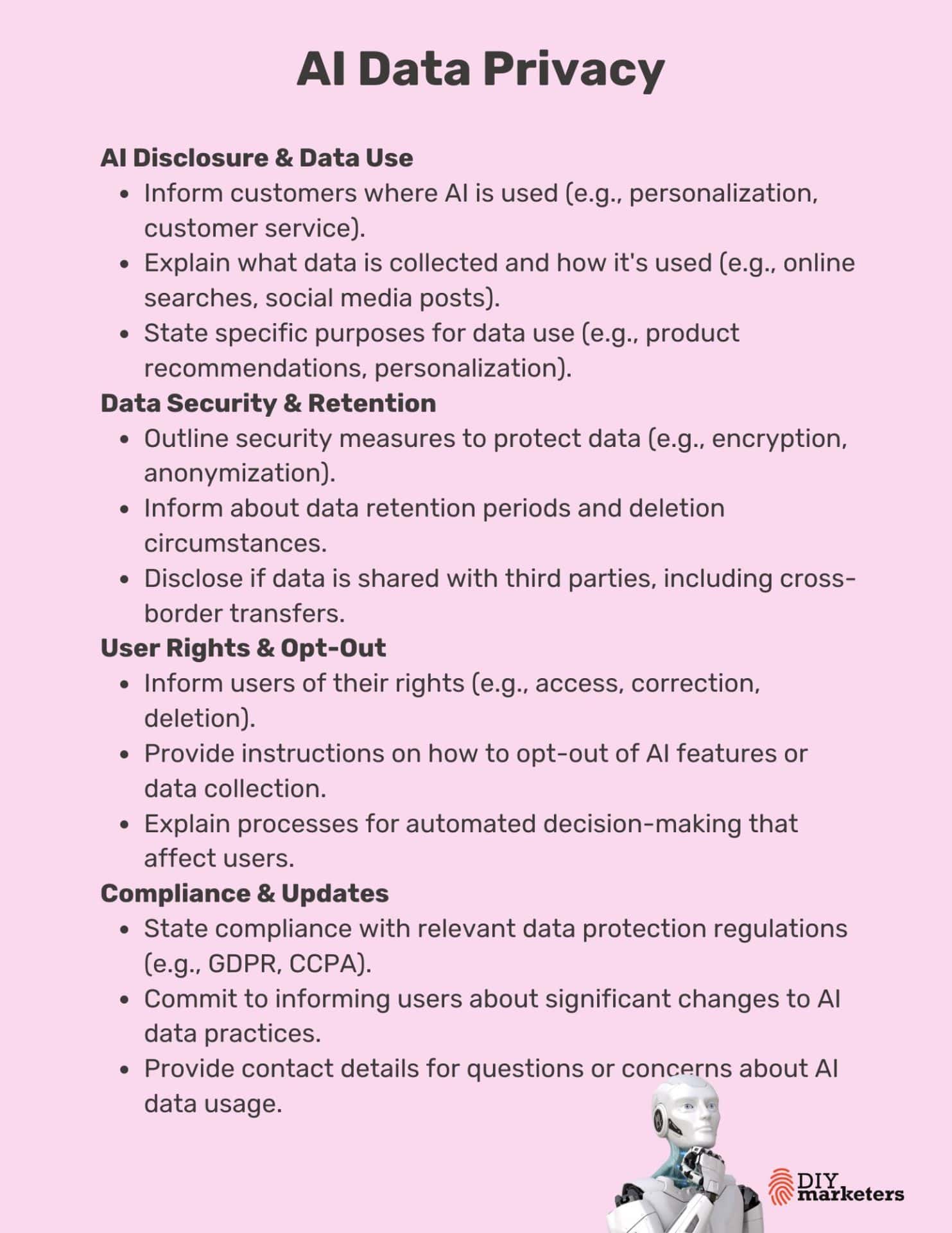

Based on current laws and best practices, if you’re using AI in your content and data processing, you should share the following with your audience to address privacy concerns with AI:

- AI disclosure: Tell your audience where AI is being used to personalize experiences or process customer data. Transparency builds trust.

- Data collection: Explain what data is being collected and how it’s being used to train or power AI systems. This includes both direct and inferred data.

- Purpose of data use: Clearly state the purpose of the data used in AI systems, e.g. personalization, product recommendations, or content generation.

- Data retention: Tell your audience how long their data will be retained and used for AI purposes, and under what circumstances it will be deleted.

- Opt-out: Provide clear instructions on how customers can opt out of AI-powered features or of data collection for AI training, if applicable.

- Data security: Outline the security measures in place to protect customer data used in AI systems from breaches or unauthorized access.

- Third-party sharing: Disclose whether and how data used for AI purposes will be shared with third parties, including cross-border data transfers.

- Automated decision-making: If AI is used for automated decisions that significantly affect users, explain how the process works.

- User rights: Inform users of their rights over their data, e.g. the right to access, correct, or delete data used in AI systems.

- Compliance: Clearly state your compliance with relevant data protection regulations such as the GDPR, the CCPA, or other applicable laws.

- AI practice updates: Commit to notifying users about significant changes to your AI data practices or policies.

- Contact: Provide clear contact details for users who have questions or concerns about AI data usage.
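Two of the items above, data retention and opt-out, only build trust if your systems actually honor them. As a minimal Python sketch, the filter below assumes each customer record carries a collection timestamp and an opt-out flag, and keeps only the records that may still be used for AI training. The `CustomerRecord` fields and the 365-day window are hypothetical; use whatever retention period your own privacy notice promises.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Optional

# Hypothetical retention window; match it to what your privacy notice states.
AI_RETENTION_PERIOD = timedelta(days=365)


@dataclass
class CustomerRecord:
    customer_id: str
    collected_at: datetime  # timezone-aware UTC timestamp
    opted_out_of_ai_training: bool


def eligible_for_ai_training(
    records: Iterable[CustomerRecord], now: Optional[datetime] = None
) -> List[CustomerRecord]:
    """Return only records inside the retention window that are not opted out."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [
        r
        for r in records
        if not r.opted_out_of_ai_training
        and now - r.collected_at <= AI_RETENTION_PERIOD
    ]


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    records = [
        CustomerRecord("a", now - timedelta(days=30), False),   # kept
        CustomerRecord("b", now - timedelta(days=30), True),    # opted out
        CustomerRecord("c", now - timedelta(days=400), False),  # past retention
    ]
    print([r.customer_id for r in eligible_for_ai_training(records, now)])  # ['a']
```

This sketch only covers the filtering step. A real pipeline would also need to delete expired records, not just skip them, to match what a retention statement promises.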

Please note: laws and regulations vary by region. Under the GDPR in the EU, for example, these requirements are more stringent.

FAQs About Privacy Concerns with AI

What Are the Biggest Threats to Privacy in the Age of AI?

Several major privacy risks are associated with AI technology:

- Data Collection & Surveillance: The potential for massive data collection and surveillance through AI-powered systems raises concerns about how this data might be used and by whom. It’s not just governments but corporations as well that now have access to increasingly sophisticated tools for tracking, analyzing, and potentially manipulating our behavior.

- Data Security and Breaches: Storing huge amounts of personal data in AI systems creates attractive targets for hackers and malicious actors, potentially leading to massive data breaches and identity theft. As these systems become more complex and interconnected, ensuring their security becomes more difficult, raising the stakes significantly.

- Bias and Discrimination: AI algorithms trained on biased data can perpetuate and amplify existing inequalities. If we’re not extremely careful, AI systems could deny individuals opportunities or subject them to unfair treatment. Think about things like loan applications, job interviews, and even criminal justice. Imagine if an algorithm, rather than a person, made life-altering decisions with that amount of bias. That’s the future we need to prevent.

What Can Organizations Do to Address AI Privacy Concerns?

Protecting user data privacy shouldn’t be an afterthought; it should be the foundation. For AI to reach its full potential, we need public trust. Here are some important first steps organizations can take to build that trust and do right by their users.

Build Privacy by Design (PbD) principles into AI systems. This isn’t about bolting on privacy measures later, but about making privacy a fundamental element of the design and development process. It requires organizations to prioritize data minimization from the start: collecting and retaining only the data they need, using it only for its intended purposes, and making sure users understand how their information is processed. The benefits include:

- Increased trust among users

- Reduced legal and regulatory risks

- Improved data security overall
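One way to put data minimization into practice is to declare, per purpose, which fields an AI feature may see, and to drop everything else before the data reaches the model. The Python sketch below is a minimal illustration under that assumption; the purpose names and field lists in `ALLOWED_FIELDS` are hypothetical.

```python
from typing import Any, Dict

# Hypothetical purposes and the only fields each one is allowed to use.
ALLOWED_FIELDS = {
    "product_recommendations": {"customer_id", "purchase_history"},
    "email_personalization": {"customer_id", "first_name", "preferred_language"},
}


def minimize(record: Dict[str, Any], purpose: str) -> Dict[str, Any]:
    """Keep only the fields the declared purpose needs."""
    if purpose not in ALLOWED_FIELDS:
        # Fail loudly on undeclared purposes instead of passing everything through.
        raise ValueError(f"Undeclared processing purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


if __name__ == "__main__":
    profile = {
        "customer_id": "c-123",
        "first_name": "Ada",
        "date_of_birth": "1990-01-01",
        "home_address": "1 Example St",
        "purchase_history": ["pizza stone", "pizza oven"],
    }
    print(minimize(profile, "product_recommendations"))
    # {'customer_id': 'c-123', 'purchase_history': ['pizza stone', 'pizza oven']}
```

Raising an error for an unknown purpose is a deliberate design choice: it forces every new use of customer data to be declared (and, ideally, disclosed to users) before it can run.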

Promote transparency and explainability in AI. It’s not enough to just “say” you’re being responsible. Organizations should give users understandable information about how AI systems make decisions, potentially including summaries of data lineage (where the data came from), model bias assessments, and performance metrics. It’s like showing your work: it builds credibility and helps people feel more comfortable with how these systems operate. This approach:

- Builds greater user trust

- Facilitates better regulatory compliance

- Reduces potential harm from biased outcomes
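“Showing your work” is easiest when a model is simple enough to explain itself. As a hedged illustration, the Python sketch below uses a hypothetical linear lead-scoring model (the feature names, weights, and bias are invented for this example) and reports how much each factor contributed to a given score, the kind of per-decision explanation a user could actually read. More complex models need dedicated explanation tools; this only shows the idea in the simplest case.

```python
from typing import Dict, List, Tuple

# Hypothetical, hand-picked weights for an interpretable lead-scoring model.
WEIGHTS = {
    "visited_pricing_page": 2.0,
    "opened_last_email": 1.0,
    "days_since_last_visit": -0.1,
}
BIAS = 0.5


def score_with_explanation(
    features: Dict[str, float]
) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the score plus each feature's contribution, largest effect first."""
    # In a linear model, each feature's contribution is simply weight * value.
    contributions = {
        name: weight * features.get(name, 0.0) for name, weight in WEIGHTS.items()
    }
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    score, reasons = score_with_explanation(
        {"visited_pricing_page": 1, "opened_last_email": 0, "days_since_last_visit": 10}
    )
    print(score)    # 1.5
    print(reasons)  # [('visited_pricing_page', 2.0), ('days_since_last_visit', -1.0), ('opened_last_email', 0.0)]
```

Because every contribution is listed, a customer who asks “why did I get this result?” can be shown which factors mattered and by how much.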

Are There Ethical or Moral Issues With AI?

Besides specific privacy violations, emerging technologies like artificial intelligence also introduce some seriously complex ethical and moral issues that often intertwine with concerns about its impact on society. They’re not always easy to untangle. Here’s the thing: as we inch closer to creating machines that mimic or even surpass human intelligence in certain areas, it’s not just a technological shift we’re talking about – it’s an existential one. We’re grappling with fundamental questions about what it means to be human and the role we envision for artificial intelligence in shaping our future.

Conclusion

Listen, privacy concerns with AI aren’t just about hypothetical scenarios or futuristic fears. They’re about today: the choices we’re making right now, and how those choices shape our world for better or worse. The information I shared today is a wake-up call for every single one of us. We must make conscious decisions about the technologies we create and embrace, and make sure our journey into the age of artificial intelligence prioritizes the values we hold dear: human dignity, fairness, and, of course, privacy.